I've already checked that disabling firewalld and running `setenforce 0` doesn't help in this scenario.

I had similar performance on CentOS7, so I wonder if these problems could be related to this OS line. I also wondered whether the XFS filesystem was a bottleneck, but I believe your CentOS7 templates are on ext4, so that would suggest not.

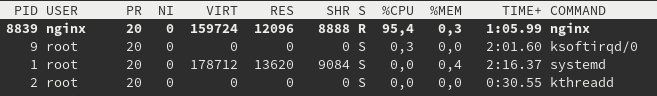

I did a funny test: I created a 1G test file on my VPS, launched nginx and tried to download it from your test server. This is what happened to my server:

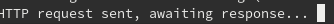

And the response on yours (as expected in such a case):

@radmerc - I think I'm on the same node, running a minimal Debian 9 installed from ISO, LVM on LUKS, OpenSSH server, no other applications (no web server, no MySQL, etc.)

@AnthonySmith - I will put in a ticket to follow up with more details when I get a chance, but in case it helps diagnose: I'm seeing a few 'rcu_sched self-detected stall on CPU' entries in `dmesg` after `apt update && apt upgrade` took over 30 minutes.

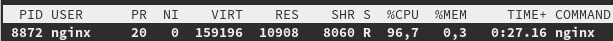

Well, I restarted nginx because it had stopped responding for too long, and ran the test again. The download was going strong until around 77% (over 60MBps), and then, once again, it dropped to 200KBps:

@uptime said:
> @AnthonySmith - I will put in a ticket to follow up with more details when I get a chance, but in case it might help diagnose, I'm seeing a few 'rcu_sched self-detected stall on CPU' entries via dmesg after the apt update && apt upgrade took over 30 minutes

OK, I am looking into it anyway. It took 22 minutes here, which included the time to create a swap file; for a first run I don't think that is so bad.

I know you probably checked, but you are using the virtio driver, not the IDE one?
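One way to answer that from inside the guest, rather than from the control panel, is to look at how the kernel names the disks. The sketch below is a heuristic, not an authoritative check: it assumes the usual Linux naming, where virtio-blk disks show up as `vdX` under `/sys/block`, while disks on an emulated IDE/SATA controller usually show up as `sdX` (or `hdX` with the old IDE driver). The helper names `classify_disks` and `local_disks` are made up for this example.

```python
from __future__ import annotations

import os


def classify_disks(names: list[str]) -> dict[str, str]:
    """Guess the emulated disk bus from Linux block device names (heuristic).

    vdX -> virtio-blk; sdX -> SCSI/SATA layer (emulated IDE/AHCI, or
    virtio-scsi); hdX -> legacy IDE driver. Other devices (loop*, ram*,
    dm-*, sr*, nvme*) are ignored.
    """
    result = {}
    for name in names:
        if name.startswith("vd"):
            result[name] = "virtio-blk"
        elif name.startswith("sd"):
            result[name] = "scsi/sata (could be emulated IDE, AHCI or virtio-scsi)"
        elif name.startswith("hd"):
            result[name] = "legacy ide"
    return result


def local_disks() -> dict[str, str]:
    """Classify the whole disks listed in /sys/block (empty dict if unavailable)."""
    try:
        return classify_disks(sorted(os.listdir("/sys/block")))
    except OSError:
        return {}
```

On a guest still using the IDE driver, `local_disks()` would be expected to list an `sda` entry rather than `vda`. Since virtio-scsi also produces `sdX` names, the control panel setting remains the final word.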

@AnthonySmith @uptime - unfortunately, as I've mentioned previously, I already have the virtio block device drivers enabled.

I have, however, found something unusual and just confirmed that my VPS and the test VPS from @AnthonySmith behave differently:

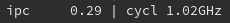

As you've seen from my previous posts, I have quite high CPU usage on basic tasks like simple file serving via nginx or downloading with wget. Now I've noticed that the `cycl` value in atop is really low on my VPS (it doesn't seem to go above ~120MHz), while it goes above 1GHz on the test VPS from @AnthonySmith (see below):

My VPS:

Test VPS:

Do you have any idea what the reason behind this might be? Could it be a power-saving problem, throttling, or some weird behavior of the CPU governor?

Update:

Also, as I mentioned earlier, I've seen the slowdown develop over time (e.g. after 10-15 hours of idling). I just did a forced shutdown / boot from the VPS control panel and this is what happened:

This doesn't exactly solve my problems, but it hints that they may be related to CPU frequency scaling or something around it.
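A quick way to cross-check the frequency/governor suspicion from inside the guest is to read what the kernel exposes. This is a minimal sketch assuming a Linux guest: it parses the `cpu MHz` lines of `/proc/cpuinfo` and tries to read `/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor`. In many KVM guests the cpufreq directory does not exist at all and `cpu MHz` just reports a nominal value, so this does not reproduce atop's `cycl` figure; it only shows whether the guest itself has any frequency scaling to blame. The function names are hypothetical.

```python
from __future__ import annotations

from pathlib import Path


def parse_cpu_mhz(cpuinfo_text: str) -> list[float]:
    """Return the 'cpu MHz' value reported for each logical CPU."""
    values = []
    for line in cpuinfo_text.splitlines():
        # x86 lines look like "cpu MHz\t\t: 2394.454"
        key, sep, value = line.partition(":")
        if sep and key.strip() == "cpu MHz":
            values.append(float(value))  # float() tolerates surrounding spaces
    return values


def read_governor(cpu: int = 0) -> str | None:
    """Return the cpufreq governor for one CPU, or None if none is exposed."""
    path = Path(f"/sys/devices/system/cpu/cpu{cpu}/cpufreq/scaling_governor")
    try:
        return path.read_text().strip()
    except OSError:
        # Common inside VMs: the guest has no cpufreq driver at all.
        return None


def main() -> None:
    try:
        cpuinfo = Path("/proc/cpuinfo").read_text()
    except OSError:
        print("/proc/cpuinfo not available (not a Linux system?)")
        return
    print("cpu MHz per CPU:", parse_cpu_mhz(cpuinfo))
    print("governor:", read_governor() or "not exposed")


if __name__ == "__main__":
    main()
```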

Between CPU and RAM use, you're going to lose a lot of I/O in the name of having things stable. Throw Debian 8 on it (or Alpine, it won't be the fastest, but you can still tune your FS), lock it down, and call it a day.

My pronouns are asshole/asshole/asshole. I will give you the same courtesy.

OK, confirming my previous observation that things get slow after idling, here's my result after a night of the VPS doing nothing:

However, the results on @AnthonySmith test server are still fine.
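To put a number on the after-idle slowdown instead of relying on screenshots, one option is to time a fixed CPU-bound workload right after boot and again after a long idle period. The sketch below is hypothetical: the names `time_workload` and `probe` and the SHA-256 workload are arbitrary choices, not anything from the thread. The absolute numbers mean nothing; only the comparison between runs on the same VPS does.

```python
from __future__ import annotations

import hashlib
import time


def time_workload(rounds: int = 200, size: int = 1 << 20) -> float:
    """Seconds taken to SHA-256 a fixed zero-filled buffer `rounds` times."""
    buf = b"\0" * size
    start = time.perf_counter()
    for _ in range(rounds):
        hashlib.sha256(buf).digest()
    return time.perf_counter() - start


def probe(samples: int = 3, pause: float = 60.0) -> list[float]:
    """Run the workload several times, idling `pause` seconds in between."""
    timings = []
    for i in range(samples):
        timings.append(time_workload())
        if i + 1 < samples:
            time.sleep(pause)
    return timings
```

For example, a script calling `print(probe(samples=3, pause=60.0))` could be run from cron every few hours with its output kept; a jump in the timings after a night of idling would line up with the behavior described above.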

I see messages like this in dmesg: `lowering kernel.perf_event_max_sample_rate`. The numbers go from 59000 down to as low as 7000 so far, but I have no idea why.
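To track how far the kernel keeps lowering the perf sample rate, and whether it coincides with RCU stalls like the ones @uptime reported, the log lines can be pulled out with a regex. This sketch assumes the message format printed by recent Linux kernels, `perf: interrupt took too long (X > Y), lowering kernel.perf_event_max_sample_rate to Z`; if your kernel words it differently, the pattern needs adjusting. `summarize_dmesg` is a hypothetical helper.

```python
import re

# Assumed kernel message format, e.g.:
#   perf: interrupt took too long (2509 > 2500), lowering kernel.perf_event_max_sample_rate to 79500
PERF_RE = re.compile(
    r"interrupt took too long \((\d+) > (\d+)\), "
    r"lowering kernel\.perf_event_max_sample_rate to (\d+)"
)
RCU_STALL = "rcu_sched self-detected stall on CPU"


def summarize_dmesg(text: str) -> dict:
    """Collect the new perf sample rates (in log order) and count RCU stalls."""
    rates = [int(m.group(3)) for m in PERF_RE.finditer(text)]
    stalls = sum(1 for line in text.splitlines() if RCU_STALL in line)
    return {"sample_rates": rates, "rcu_stalls": stalls}
```

Feed it saved `dmesg` output (reading the kernel log may require root), e.g. `summarize_dmesg(open("dmesg.txt").read())`.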

I'm experiencing the same thing, though it seems to have run more stably over the last few days than it did a few weeks ago. I haven't had time to put in a ticket (plus I'm paying peanuts, and I'm using it to run Nextcloud, which is not officially supported either...).

@AnthonySmith the ticket # is 773306. The issue was with unbuffered writes and uncached reads. I'm not sure if it's the same node or issue, but it seems quite similar, so I figured it could be of help.

I'm past the tweaks to perf, which turned out to be useless. I've now gone with tuned, which frankly I never had a need to use before, setting `tuned-adm profile latency-performance`, which I believe puts a lot more effort into caching in memory. I'm currently getting 91.4MB/s on the same test file download. Now I wonder how this will hold up in the long term, and also whether it'll help with other issues such as services taking a long time to respond. There is also the possibility that something changed on the host rather than in my guest and it's just a matter of timing.

Update:

So I tried a 10GB file to see how this would work when memory fills up and, unfortunately (but as expected), it started to get really slow once it went above 3.2G of mem/cache. PAG in atop started blinking red etc., and the download speed varied between 200KBps (when it hit the limit) and up to 10MBps (probably when it freed some memory / wrote the buffers to disk; it was a bit hard for me to focus on watching this at the time).

I am going to try reinstalling CentOS8 with ext4 as the filesystem and see if that makes any difference.
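To check whether the 10GB slowdown really starts when the page cache fills up, it may help to log the relevant `/proc/meminfo` fields while the download runs. A minimal sketch, assuming a Linux guest: `Cached`, `Dirty` and `Writeback` are standard `/proc/meminfo` fields, but the choice of which ones to watch is made for this example, and `parse_meminfo`/`watch` are hypothetical names.

```python
from __future__ import annotations

import time
from pathlib import Path

FIELDS = ("MemTotal", "MemAvailable", "Cached", "Dirty", "Writeback")


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse lines like 'Dirty:   51200 kB' into {name: value} (kB for most fields)."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def watch(samples: int = 10, interval: float = 1.0) -> None:
    """Print the selected fields in MiB once per interval (Linux only)."""
    for _ in range(samples):
        info = parse_meminfo(Path("/proc/meminfo").read_text())
        print("  ".join(f"{k}={info.get(k, 0) // 1024}MiB" for k in FIELDS))
        time.sleep(interval)
```

Calling `watch(samples=60)` during the download prints one line per second; `Dirty`/`Writeback` climbing while throughput drops would point at write-back to disk as the bottleneck.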

Alright, after many hours of idling it's all still working well. I just connected via SSH without problems and wget runs really smoothly. Compared to my previous installation, I've removed all the LVM stuff and installed CentOS8 directly on an ext4 partition. I'll check whether it keeps working well tomorrow, and if so, I'll follow up with the Nextcloud-related stuff.

Alright, so the good news is: I've installed Nextcloud and the uptime of the machine itself is past 24 hours. Everything is still working, and I've synced all my files without problems.

The bad news is: I have no idea why this works. As explained in my previous message, I just installed CentOS8, but dropped LVM+XFS and installed it onto a basic ext4 partition instead. As mentioned in the first post, I've disabled `dnf-makecache.timer` on this setup as well in order to lower the CPU/IO load. I haven't touched `tuned` yet. I wish I could say 'it must've been XFS!', but as far as I know the CentOS7 template from the panel is set up on a plain ext4 partition by default, without any extras, and I still experienced these problems on it. (In fact, as I also explained to @AnthonySmith in a PM, I moved to CentOS8 from the CentOS7 template because of these issues in the first place.)

I'll update the topic after a longer run. If it works, great! If it doesn't, I'll accept defeat.

Well, it's working great so far. I guess the problem is solved. In the end I have no idea why, but I hope that at least some of the stuff mentioned in this topic will help others who might have similar issues. Thanks

Comments

I think I may be on the same node with a little 100 GB storage instance ...

I'm currently about 20 minutes into an `apt update && apt upgrade` - seeing CPU steal time occasionally go up to 100%

HS4LIFE (+ (* 3 4) (* 5 6))
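Steal time, as mentioned above, can also be measured without atop or top by sampling the aggregate `cpu` line of `/proc/stat` twice. This is a minimal sketch assuming the standard Linux field order (`user nice system idle iowait irq softirq steal guest guest_nice`); the function names are hypothetical. `guest`/`guest_nice` are left out of the total because the kernel already counts them in `user`/`nice`.

```python
from __future__ import annotations

import time
from pathlib import Path


def parse_cpu_line(stat_text: str) -> list[int]:
    """Return the aggregate 'cpu' counters from /proc/stat (in clock ticks)."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            return [int(x) for x in fields[1:]]
    raise ValueError("no aggregate 'cpu' line found")


def steal_percent(before: list[int], after: list[int]) -> float:
    """Share of elapsed CPU time stolen by the hypervisor between two samples."""
    # Only the first eight fields form the total; index 7 is 'steal'.
    delta = [a - b for a, b in zip(after[:8], before[:8])]
    total = sum(delta)
    return 100.0 * delta[7] / total if total else 0.0


def sample_steal(interval: float = 1.0) -> float:
    """Measure steal percentage over `interval` seconds (Linux only)."""
    path = Path("/proc/stat")
    before = parse_cpu_line(path.read_text())
    time.sleep(interval)
    after = parse_cpu_line(path.read_text())
    return steal_percent(before, after)
```

`sample_steal(5.0)` returns the percentage of CPU time the hypervisor took away during a five-second window; values near 100% would match what is described above.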

Here is what I see from the test VPS to your IP (I've removed anything that could be revealing, for privacy):

And a 1GB file from leaseweb:

`2020-01-03 17:19:00 (64.6 MB/s) - ‘1000mb.bin.1’ saved [1000000000/1000000000]`

Pretty far from what you are seeing.

Anyway, I will try to do some rebalancing this month. If you are not happy with it, though, just say so and I will sort out a pro-rated refund.

https://inceptionhosting.com

Please do not use the PM system here for Inception Hosting support issues.


Yup, in my case.

@AnthonySmith - just checked and I see the IDE driver being used for the disk (and virtio for the network).

I'll switch the disk driver to virtio and see if that fixes anything.

EDIT: performance seems back to normal after switching to the virtio disk driver and rebooting.

Did an `scp` of a 3 GB file to Stockholm and back, seeing about 100 Mbit/s each way. No more rcu_sched complaints in dmesg.

Not sure if or how these observations might relate to whatever @radmerc is looking at, but my storage instance seems to be working okay again.
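Since the thread mixes units (MBps, KBps, Mbit/s), a small conversion helps compare the numbers. This sketch assumes decimal units (1 MB = 1,000,000 bytes, 8 bits per byte); some tools report binary units, so treat the results as approximate. The function names are hypothetical.

```python
def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert megabits/s to megabytes/s (decimal units, 8 bits per byte)."""
    return mbit_per_s / 8


def transfer_seconds(size_bytes: int, mbyte_per_s: float) -> float:
    """Time to move `size_bytes` at a steady rate given in decimal MB/s."""
    return size_bytes / (mbyte_per_s * 1_000_000)
```

With these, the 100 Mbit/s `scp` works out to 12.5 MB/s, so a 3 GB file takes about 240 s each way. A 1GB file at the 64.6 MB/s from the wget test takes about 15.5 s, while at 200 KBps (0.2 MB/s) the same file would take about 5000 s, which shows how severe the stalls reported above are.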


I have experienced this "waking up syndrome" before (not with Inception). I couldn't get it resolved and eventually migrated off it.

Even a fresh templated install with no services yielded the same results.

I bench YABS 24/7/365 unless it's a leap year.

@cybertech This is possibly a bad cache design on a busy node. Obviously, keep that bitch running 24/7 and your throughput will be fine.


Yes indeed, but damn, it doesn't make sense if I install a web panel blazing fast and then get a 5XX error when logging in 5 minutes later.


Most templates I've seen are actually XFS-based, but I don't know what the heck @AntGoldFish did to his.
