[HOWTO] Set up AMD iGPU passthrough with VirtFusion

iGPU passthrough with VirtFusion

I've spent quite a lot of time setting up iGPU passthrough with an AMD processor. It was super easy with Intel's iGPU (VT-d) but not that easy with a Ryzen APU. Also, thanks a lot to @VirtFusion for your efforts to implement such functionality. In general this works much the same way with an external GPU or any other PCIe device; you just need to adjust the IOMMU groups accordingly.

Guide

Enable IOMMU in Motherboard UEFI

As a first step, make sure that IOMMU is enabled in your motherboard's UEFI. It should be explicitly set to Enabled and not left on Auto: I noticed that on some motherboards (e.g. MSI) Auto doesn't work for some reason, but Enabled does.

Install OS and VirtFusion

Perform an installation from ISO without a desktop environment (in theory you can use one alongside, but it will install packages that interfere with our setup). Install VirtFusion and configure networking and everything else as described in their docs. Do not create a VM yet, however. Also install pciutils, which might not be part of a default installation; we'll need it later.

Server setup

Now comes the actual part of the passthrough procedure.

The hypervisor must not load any driver that activates the iGPU, because the iGPU can only be claimed by one driver per boot. We need to tell the hypervisor kernel not to load any video modules by appending the following to the GRUB_CMDLINE_LINUX_DEFAULT= line:

# nano /etc/default/grub

amd_iommu=on initcall_blacklist=sysfb_init video=simplefb:off video=vesafb:off video=efifb:off video=vesa:off disable_vga=1 textonly vfio_iommu_type1.allow_unsafe_interrupts=1 kvm.ignore_msrs=1 modprobe.blacklist=amdgpu,snd_hda_intel

Not all of these flags may be necessary, but setting them usually has no negative effect even when they aren't needed.
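
Once the GRUB config has been regenerated and the server rebooted (both steps follow further down), it can be worth confirming that the flags really reached the running kernel. A minimal sketch, assuming a POSIX shell; has_kernel_flag is a hypothetical helper name, and its optional second argument only exists so the check can be tried against a sample file instead of /proc/cmdline:

```shell
# has_kernel_flag FLAG [FILE]
# Succeeds if FLAG appears as a whole word on the kernel command line.
# FILE defaults to /proc/cmdline; passing another file is only meant for testing.
has_kernel_flag() {
    flag="$1"
    file="${2:-/proc/cmdline}"
    # Split the single command line into one flag per line, then match exactly.
    tr ' ' '\n' < "$file" | grep -qxF -e "$flag"
}
```

For example, has_kernel_flag amd_iommu=on && echo "flag is active" prints the message only if the flag is present on the booted kernel.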

Next, we need to load the vfio drivers, which act as passthrough drivers and later expose the iGPU as a device inside the virtual machine.

DEBIAN

# nano /etc/modules

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

RHEL

# vi /etc/modules-load.d/vfio-pci.conf

vfio

vfio_iommu_type1

vfio-pci

vfio_virqfd

Then we need to tell vfio which devices it should pass through. You can look up the device IDs of the IOMMU group through pciutils with lspci -nnv. Note that you have to list the IDs of all devices in the IOMMU group to be passed through, even if you don't use, for instance, the sound device.
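
To see which devices share an IOMMU group, the groups can be read directly from sysfs. This is a minimal sketch, not part of the original setup; list_iommu_groups is a hypothetical helper name, sort -V assumes GNU coreutils (or a compatible sort), and the optional argument only exists so the function can be tried against a test directory:

```shell
# list_iommu_groups [ROOT]
# Prints one "group N: PCI-address" line per device, ordered by group number.
# ROOT defaults to /sys/kernel/iommu_groups; overriding it is only meant for testing.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    for dev_path in "$root"/*/devices/*; do
        [ -e "$dev_path" ] || continue      # glob matched nothing: no groups found
        group="${dev_path%/devices/*}"      # strip "/devices/<address>"
        group="${group##*/}"                # keep only the group number
        printf 'group %s: %s\n' "$group" "${dev_path##*/}"
    done | sort -V
}
```

Calling list_iommu_groups without arguments on the hypervisor prints the real groups; each printed address can then be passed to lspci -nns <address> to get the device IDs. If nothing is printed, IOMMU is most likely not active.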

# nano /etc/modprobe.d/vfio.conf

options vfio-pci ids=1002:1863,1002:1864
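
Instead of copying the IDs by hand, the options line can be assembled from lspci -nn output. A minimal sketch, assuming GNU grep (for -o) and that only the devices you want to pass through are fed in; vfio_ids_line is a hypothetical helper name:

```shell
# vfio_ids_line
# Reads `lspci -nn` output on stdin and prints the matching modprobe options line.
# Only [vendor:device] pairs are picked up; class codes like [0300] contain no colon.
vfio_ids_line() {
    ids=$(grep -oE '\[[0-9a-f]{4}:[0-9a-f]{4}\]' | tr -d '[]' | sort -u | paste -sd, -)
    [ -n "$ids" ] || return 1               # no IDs found on stdin
    printf 'options vfio-pci ids=%s\n' "$ids"
}
```

Something like lspci -nn -s 20:00 | vfio_ids_line would cover all functions of one slot (20:00 is just an assumed address here). Use lspci -nn without -v for this, since the verbose output also contains subsystem IDs, and double check the result if the IOMMU group spans more than one slot.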

Then we update the GRUB config and the initramfs to reflect the new settings and restart the server:

update-grub2 (RHEL: grub2-mkconfig -o /boot/grub2/grub.cfg)

update-initramfs -u -k all (RHEL: dracut -f --regenerate-all)

reboot now

To verify that you were successful, run lspci -nnv and scroll down until you find your VGA compatible controller and Audio device. If it says Kernel driver in use: vfio-pci, you're all set.
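
If you'd rather not scroll through the lspci output, the bound driver can also be read from sysfs. A minimal sketch; pci_driver is a hypothetical helper name, the address in the usage line is an assumption, and the optional second argument only exists for testing:

```shell
# pci_driver ADDRESS [ROOT]
# Prints the name of the kernel driver bound to the PCI device, e.g. vfio-pci.
# Fails if no driver is bound. ROOT defaults to /sys/bus/pci/devices (override for testing).
pci_driver() {
    addr="$1"
    root="${2:-/sys/bus/pci/devices}"
    # The "driver" entry is a symlink to the driver's directory in sysfs.
    link=$(readlink "$root/$addr/driver") || return 1
    basename "$link"
}
```

For example, pci_driver 0000:20:00.0 should print vfio-pci for the iGPU, and the same goes for every other device in its IOMMU group.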

VirtFusion setup

After configuring the hypervisor, it's time to create our first virtual machine in VirtFusion and configure it to use the iGPU through the vfio devices we set up previously.

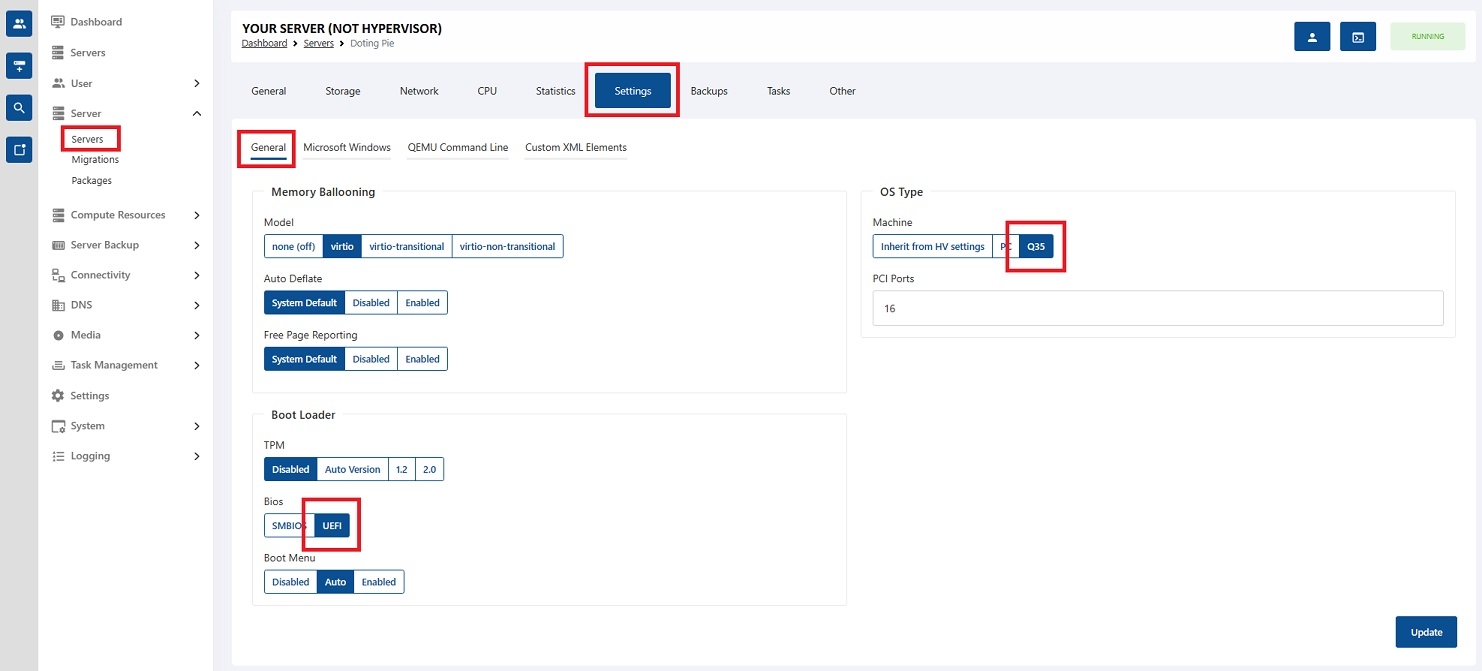

It may work with SeaBIOS (VirtFusion: "SMBIOS") and i440FX (VirtFusion: "PC"), but only in a compatibility mode. Hence we use Q35 (which supports PCIe, not only PCI like i440FX) and UEFI (because the vbios, see below, is available for UEFI only on modern systems).

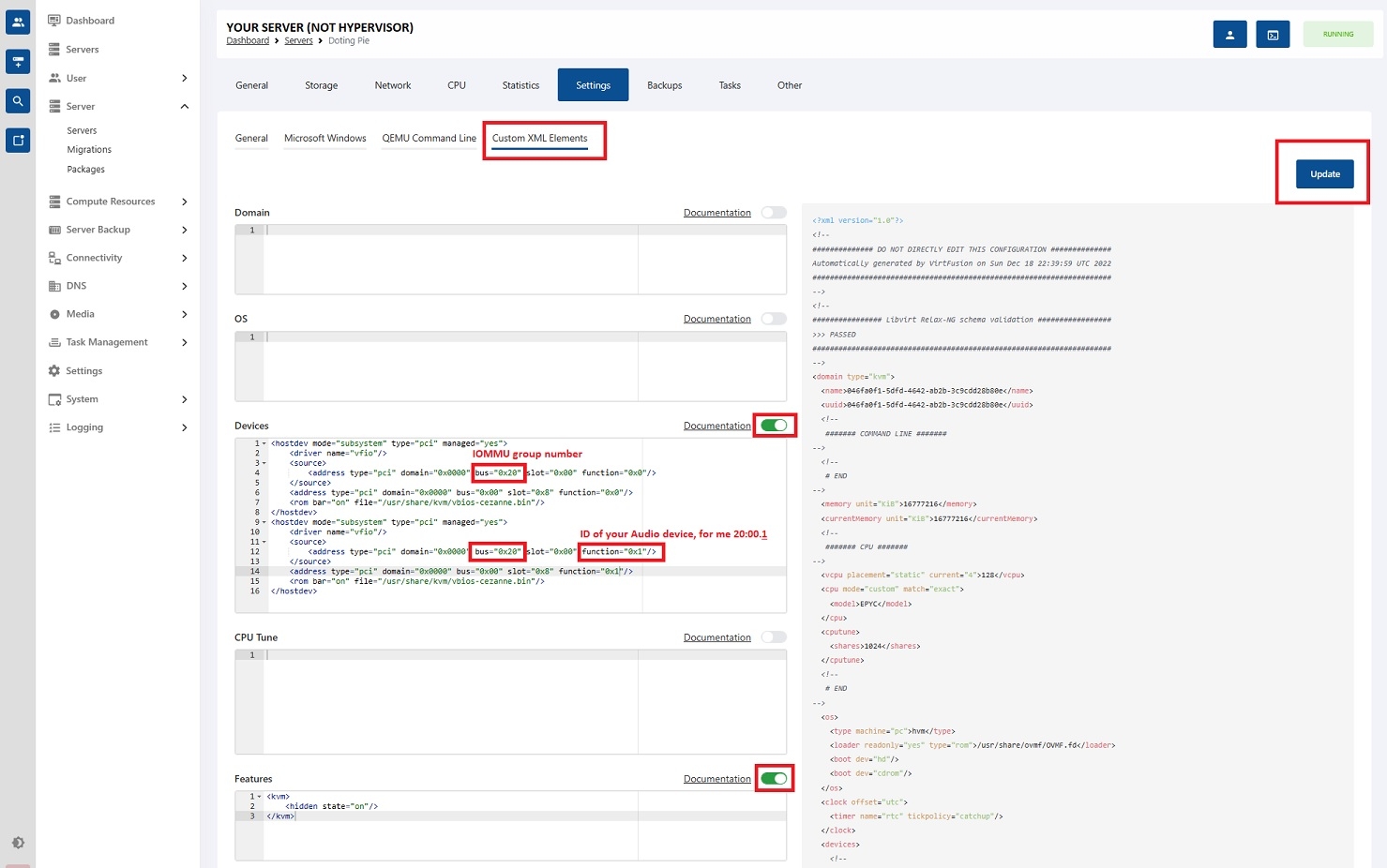

The environment settings of the virtual machine are now configured to work with vfio and an optional modern vbios. You can extract the vbios from the BIOS file of your motherboard vendor with e.g. GPU-Z. In this example I've stored the vbios of my APU at /usr/share/kvm/vbios-cezanne.bin.

In order to make libvirt create the host device for us, we need to configure it in the corresponding fields in VirtFusion. For compatibility reasons you can hide the KVM status, but this isn't mandatory. Make sure to adapt the address values (bus, slot and function) to those of the devices in your IOMMU group. You get the values with lspci -nnv.

<hostdev mode="subsystem" type="pci" managed="yes">

<driver name="vfio"/>

<source>

<address type="pci" domain="0x0000" bus="0x20" slot="0x00" function="0x0"/>

</source>

<address type="pci" domain="0x0000" bus="0x00" slot="0x8" function="0x0"/>

<rom bar="on" file="/usr/share/kvm/vbios-cezanne.bin"/>

</hostdev>
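
The address inside source is simply the host PCI address split into its parts. A minimal sketch of that mapping, assuming the full address form with domain as printed by lspci -D or found in sysfs; pci_to_libvirt_address is a hypothetical helper name:

```shell
# pci_to_libvirt_address DDDD:BB:SS.F
# Prints the libvirt <address> element for the given host PCI address.
pci_to_libvirt_address() {
    addr="$1"
    case "$addr" in
        [0-9a-f][0-9a-f][0-9a-f][0-9a-f]:[0-9a-f][0-9a-f]:[0-9a-f][0-9a-f].[0-7]) ;;
        *) echo "expected DDDD:BB:SS.F, got '$addr'" >&2; return 1 ;;
    esac
    domain="${addr%%:*}"    # DDDD
    rest="${addr#*:}"       # BB:SS.F
    bus="${rest%%:*}"       # BB
    rest="${rest#*:}"       # SS.F
    slot="${rest%.*}"       # SS
    func="${rest#*.}"       # F
    printf '<address type="pci" domain="0x%s" bus="0x%s" slot="0x%s" function="0x%s"/>\n' \
        "$domain" "$bus" "$slot" "$func"
}
```

pci_to_libvirt_address 0000:20:00.0 prints the same source address line as in the example above. The second address element (the guest-side slot) is not derived from the host and stays whatever you choose.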

Enable the custom elements you've filled out and update the config. Then restart the virtual machine.

Do not assign a PCIe device (in this case the iGPU) to more than one virtual machine at a time or you'll get permission errors from vfio or driver errors.

That's it. I hope this guide is helpful to you.

Comments

very nice, impressive. now let's see ~~paul allen's card~~ the hardware compatibility list


It should work with Renoir APUs and newer, and with all chipsets from the x400 series onward.